Feb 19, 2026

AI funnel for docs: why llms.txt automation isn't a strategy

Oluwatise Okuwobi

Content Marketing Manager

Documentation is changing rapidly. Teams have typically treated documentation as a post-sale support asset, a reference for humans who have already bought the product. It was there to help users troubleshoot bugs, look up API endpoints, or configure integrations.

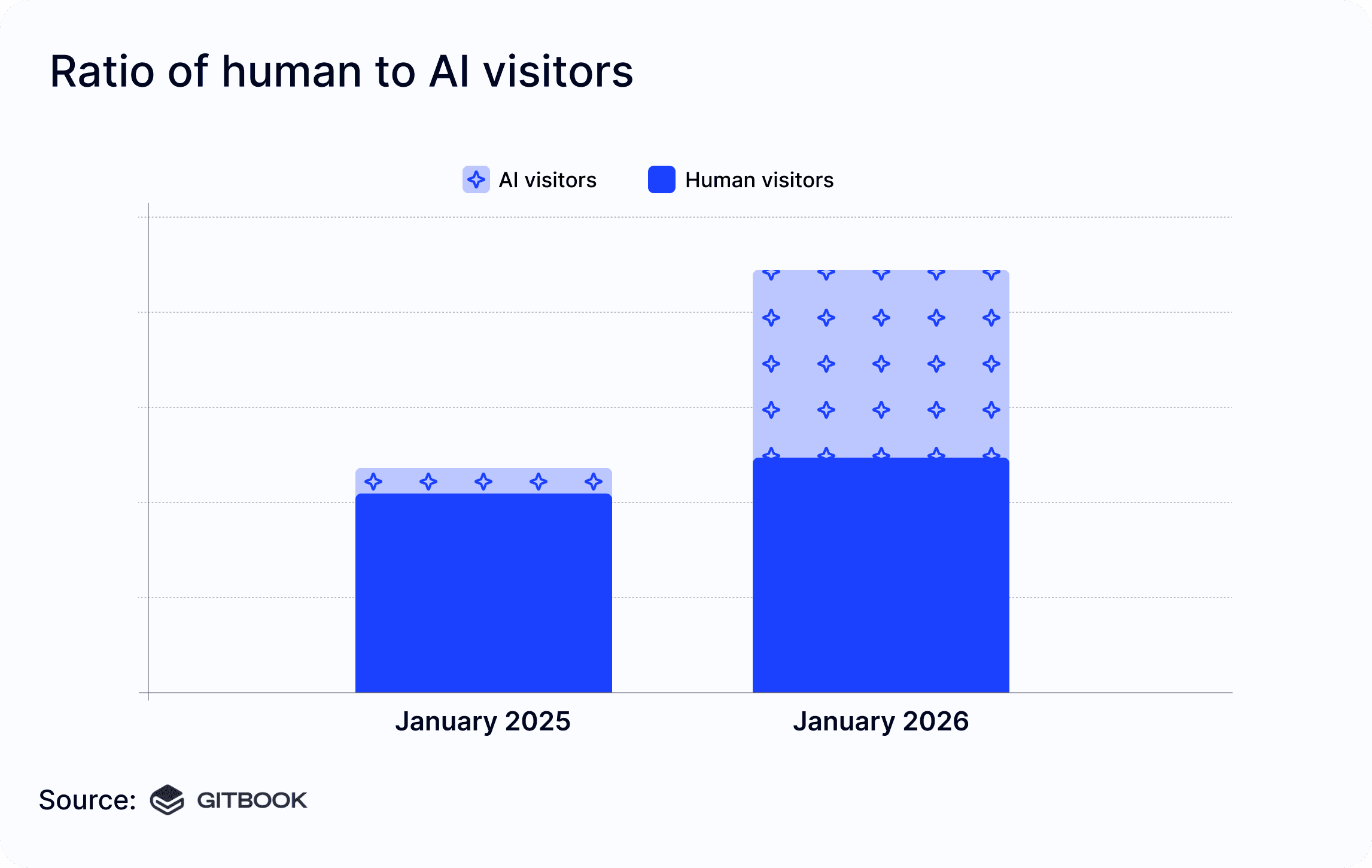

But in 2026, that mindset is a revenue leak. According to data from 2025, readership of documentation by AI agents grew by over 500% in a single year. By the end of last year, non-human agents like ChatGPT, Claude, and Perplexity accounted for 41-42% of all traffic to documentation sites.

That trend continues into 2026. Last month, 48% of visitors to documentation sites across Mintlify were AI agents.

Before you dismiss this as just “bot traffic”, consider that this is the new AI marketing funnel. If your docs are optimised for this new reality, you win the recommendation before a human ever visits your site.

The new Top of Funnel (It’s not Google anymore)

For two decades, the buyer’s journey typically started with a Google search. A developer would type “best API for payment processing” and scan the blue links before clicking on Stripe, PayPal, and a few others. The battle was to rank at the top of the search engine results pages.

In 2026, that behaviour is being replaced by “The Ask”. Instead of browsing, users now ask AI agents: “What is the best API for fintech integration?” or “Compare the pricing models of Tool X and Tool Y.”

The answer is no longer pulled from your marketing landing page; it is pulled directly from your technical documentation. Your docs are no longer just a manual; they are the raw training data for the world’s most powerful decision engines.

This shift changes everything because, unlike Google, which sends traffic, AI makes decisions. It summarises, evaluates, and recommends products. If your documentation is thin, scattered, or outdated, you could lose control of the entire narrative.

This is the new Top of Funnel, and just as SEO evolved from keyword stuffing to technical authority, GEO (Generative Engine Optimisation) is following the same trajectory.

The automated llms.txt solution

To address this shift, major platforms have released features that support AI standards like llms.txt and llms-full.txt. These are mostly pitched as a silver bullet: a way to give AI systems a clear entry point into your docs with a couple of clicks.

While llms.txt is a critical technical standard, relying on a SaaS tool to auto-generate it is a dangerous oversimplification.

The garbage in, garbage out trap

An auto-generated llms.txt file simply provides a high-speed highway for AI agents to ingest your mess. You aren’t optimising your brand; you are accelerating the rate at which ChatGPT hallucinates your product’s capabilities.

Automation only ensures ingestion, not comprehension. For example, if your API reference doesn’t explicitly distinguish between your Legacy v1 and Current v2 endpoints, an automated map won’t fix that ambiguity. The AI will ingest both with equal weight, leading to summaries that recommend deprecated code to your prospects.
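One way to make the v1/v2 distinction explicit before anything reaches llms.txt is to filter on version metadata. The sketch below is illustrative only: the `Page` fields, the `render_links` helper, and the docs.example.com URLs are all hypothetical, and it assumes your pages already carry a version label and a deprecation flag.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    url: str
    version: str      # hypothetical metadata, e.g. "v1" or "v2"
    deprecated: bool  # hypothetical flag set by the docs team


def render_links(pages, current_version="v2"):
    """Return llms.txt link lines for current, non-deprecated pages only,
    labelling each link with its version so the context is explicit."""
    lines = []
    for page in pages:
        if page.deprecated or page.version != current_version:
            continue  # keep legacy endpoints out of the curated map
        lines.append(
            f"- [{page.title}]({page.url}): current {page.version} reference"
        )
    return lines


# Placeholder pages: the same endpoint documented in two versions.
pages = [
    Page("Create payment", "https://docs.example.com/v1/payments", "v1", True),
    Page("Create payment", "https://docs.example.com/v2/payments", "v2", False),
]
print("\n".join(render_links(pages)))
```

An automated generator with no such metadata would list both pages side by side. The filter only works because someone decided, page by page, what counts as deprecated.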

Format is not strategy

Different SaaS tools will suggest a file format on the assumption that it optimises your documentation for AI, but winning the AI marketing funnel requires content strategy.

A file format can only tell AI where your content is; only a comprehensive strategy can tell it what matters. When teams rely on automation, they cede control of the narrative and hope AI figures out their unique value proposition.

Generative Engine Optimisation (GEO): The WriteChoice approach

To win in the AI marketing funnel, teams need to move beyond simple ingestion and embrace Generative Engine Optimisation.

GEO is the practice of structuring content so that AI models like ChatGPT, Perplexity, and Claude can reliably understand it, cite it, and recommend it. Where SEO matured from keyword stuffing into technical authority, GEO is about semantic authority.

At WriteChoice, we approach GEO through a process we call Context Engineering. We don’t just hand AI a map; we curate the journey.

Semantic hierarchy

We structure your documentation so that AI prioritises high-value content. If a prospect asks about “Security”, we ensure that AI retrieves your “SOC 2 Compliance” page before your “Password Reset” guide. We engineer the llms.txt logic to serve your best arguments first.
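A priority-ordered llms.txt could be assembled along these lines. This is a minimal sketch rather than WriteChoice's actual tooling: `build_llms_txt`, the numeric priorities, and the example URLs are assumptions made for illustration. The output follows the general shape of the llms.txt proposal (an H1 title, a blockquote summary, then H2 sections of Markdown links). Listing a section first does not guarantee that an agent reads it first.

```python
def build_llms_txt(site_name, summary, sections):
    """Render an llms.txt body with sections sorted by priority.

    sections: list of (heading, priority, links), where a lower priority
    number is listed earlier and links is a list of (title, url, note).
    """
    out = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, _priority, links in sorted(sections, key=lambda s: s[1]):
        out.append(f"## {heading}")
        out.append("")
        for title, url, note in links:
            out.append(f"- [{title}]({url}): {note}")
        out.append("")
    return "\n".join(out).rstrip() + "\n"


# Hypothetical sections: security content is ranked ahead of account help.
sections = [
    ("Account help", 2, [
        ("Password Reset",
         "https://docs.example.com/account/password-reset",
         "How users reset a password"),
    ]),
    ("Security", 1, [
        ("SOC 2 Compliance",
         "https://docs.example.com/security/soc2",
         "Audit scope and controls"),
    ]),
]
llms_txt = build_llms_txt(
    "Example Docs", "Hypothetical product documentation.", sections
)
print(llms_txt)
```

The priorities are the strategic part. The code only enforces an ordering that a person has already chosen.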

Stopping hallucinations

AI models struggle with ambiguity. If your documentation loosely uses a term like “integration” to mean both API and SDK, the AI will confuse them. We audit and revise your technical nomenclature to ensure consistent terminology and explicit context. By clearly separating these concepts, we reduce the chance that an AI agent recommends a deprecated method to your prospect.
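A first pass at such a terminology audit can be partly mechanical. The sketch below is a simplified illustration, not a description of any real audit tool: the `AMBIGUOUS` map and the `audit` helper are hypothetical, and the rule it applies (flag a line that uses an ambiguous term without naming API or SDK on the same line) is a deliberately crude assumption.

```python
import re

# Hypothetical glossary: ambiguous term -> qualifiers that disambiguate it.
AMBIGUOUS = {"integration": ("API", "SDK")}


def audit(text, ambiguous=AMBIGUOUS):
    """Return (line_number, term) for each line that uses an ambiguous
    term without one of its qualifiers appearing on the same line."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for term, qualifiers in ambiguous.items():
            # Match the term (singular or plural), case-insensitively.
            if not re.search(rf"\b{re.escape(term)}s?\b", line, re.IGNORECASE):
                continue
            # Qualifiers are matched case-sensitively as whole words.
            if not any(re.search(rf"\b{re.escape(q)}\b", line) for q in qualifiers):
                findings.append((number, term))
    return findings


sample = """Install the SDK integration with pip.
Configure your integration in the dashboard.
Call the API integration endpoint."""
print(audit(sample))
```

Here only the second line of `sample` is flagged, because it never says which kind of integration it means. A script like this finds candidates; a writer still has to decide what each one should say.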

The “Why” layer

Standard documentation explains how a feature works. GEO-optimised documentation explains why it matters. WriteChoice explicitly builds the business value into the documentation. Instead of just documenting an endpoint, we add context and the results it delivers. This increases the likelihood that an AI summary won’t just describe your product features, but will pitch your ROI directly to the user.

Why strategy remains important

It is a misconception that you need to rebuild your entire infrastructure to win the AI marketing funnel.

Custom portals like the ones we build on Next.js or Fumadocs offer the highest ceiling for technical control: we engineer the llms.txt file from scratch, which gives us granular control over exactly what AI ingests and what it ignores. But the principles of Generative Engine Optimisation (GEO) apply to every platform.

An automated tool can generate a map of your site. But only a strategic partner can ensure that map leads the AI exactly where you want it to go: to your Why Us, your Security, and your ROI.

FAQs

1. What exactly is an llms.txt file?

Think of llms.txt as a robots.txt or sitemap, but built specifically for Large Language Models (LLMs). It is a standardized, Markdown-based file placed in your website's root directory that tells AI agents (like ChatGPT, Claude, and Perplexity) how to navigate, interpret, and ingest your documentation without getting lost in HTML noise or irrelevant pages.

2. What is Generative Engine Optimisation (GEO)?

If SEO is the practice of ranking on Google's search results page, GEO is the practice of being cited as the best answer by an AI chatbot. It involves structuring your digital content so that generative AI systems can reliably understand your brand's authority, differentiate your product from competitors, and recommend you directly to users.

3. If my documentation platform auto-generates an llms.txt file, aren't I already optimised?

No. Auto-generation only solves the ingestion problem; it does not solve the comprehension problem. If your current documentation is poorly structured, lacks clear business value, or uses ambiguous terminology, an automated llms.txt file simply feeds that bad context to the AI faster. True optimisation requires engineering the context so the AI favours your brand's narrative.

4. Do we need to build a custom portal to execute a GEO strategy?

Not necessarily. While custom portals (like those built on Next.js or Fumadocs) give you the absolute highest level of control over your AI architecture, the principles of Context Engineering apply anywhere. At WriteChoice, we can implement GEO strategies whether you use GitBook, ReadMe, Mintlify, or a bespoke open-source stack.

5. How long does it take to optimise our documentation for AI?

While hiring an in-house technical writer can take months just to get them up to speed on your product, WriteChoice deploys a full squad to audit, restructure, and publish an AI-optimised documentation portal in just 6 to 8 weeks.
